World’s First AI Pageant To Judge Winner On Beauty And Social Media Clout

Leslie Katz

Contributor

I write about the intersection of art, science and technology.

Apr 15, 2024, 05:12pm EDT

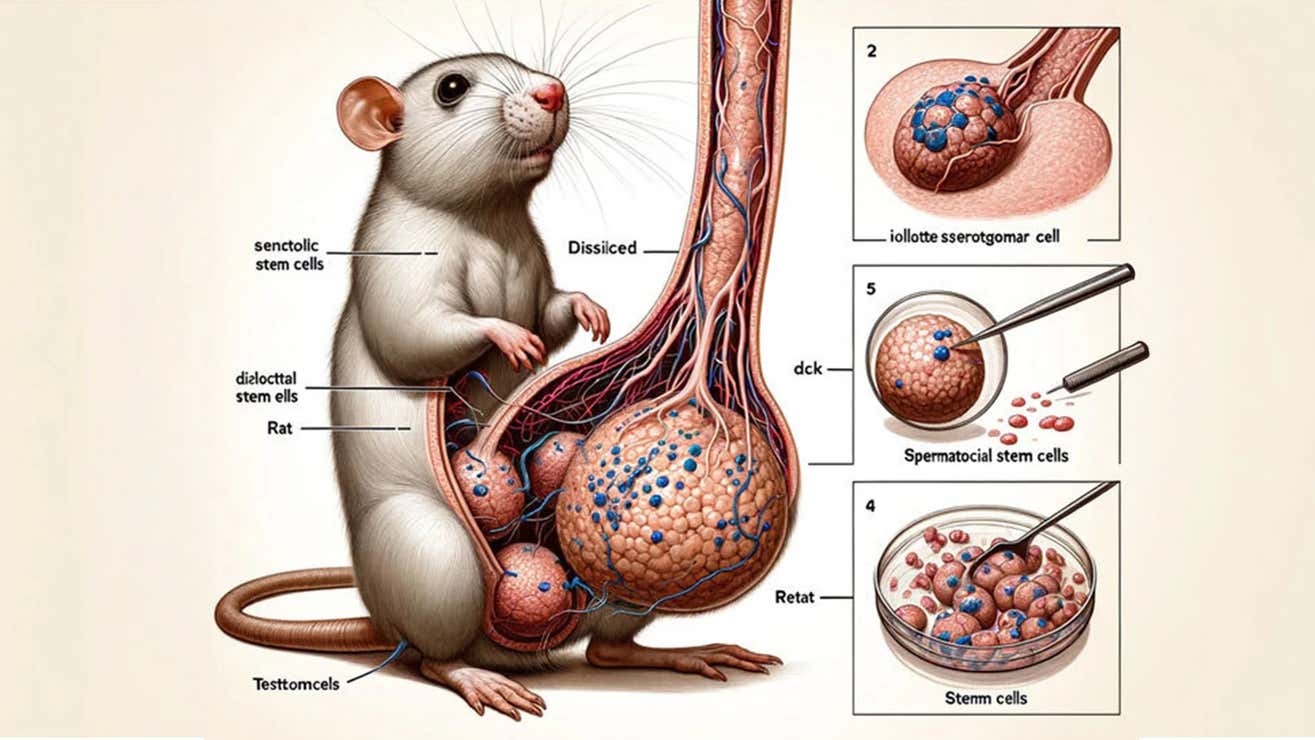

Popular AI-generated influencer Emily Pellegrini, a Miss AI judge, is uniquely qualified for the ... WORLD AI CREATOR AWARDS

Models and influencers crafted from artificial intelligence can now jockey for the title “Miss AI.” Yup, the world’s first AI beauty pageant has arrived to crown faux humans for their pixelated perfection.

AI-generated contestants will be judged on their looks and polish, but also on the technical skill that went into creating them and on their online pull.

“AI creators’ social clout will be assessed based on their engagement numbers with fans, rate of growth of audience and utilization of other platforms such as Instagram,” according to the World AI Creator Awards (WAICA). Miss AI marks the program’s inaugural contest, with others to come focusing on fashion, diversity and computer-generated men. Fanvue, a subscription-based platform that hosts virtual models, some of whom offer adult content, is a WAICA partner.

Miss AI (well, the creator behind Miss AI) will win a $5,000 cash prize, promotion on the Fanvue platform and PR support worth more than $5,000. The runner-up and third-place winner will also snag prizes. The virtual victors will be announced on May 10, with an online awards ceremony set to take place later in the month.

AI-generated humans like Lexi Schmidt, who's on Fanvue, now have their very own beauty pageant. WORLD AI CREATOR AWARDS

The competition opened online to entrants on Sunday as AI influencers increasingly grab attention and paying gigs. One, named Alba Renai, recently announced she’d been hired to host a weekly special segment on the Spanish version of Survivor. She’s not the only employed AI-generated influencer, either.

Aitana Lopez, one of four Miss AI judges, can pull in more than $11,000 a month representing brands. The AI-generated Spanish model and influencer has upward of 300,000 Instagram followers, many of whom shower her with adoring comments. She’s joined on the judging panel by another AI-generated model, Emily Pellegrini, who has more than 250,000 followers on Instagram and has caught the attention of sports stars and billionaires who want to date her.

Two judges on the panel, however, can include arteries and veins on their resumes. They’re Andrew Bloch, an entrepreneur and PR adviser, and Sally-Ann Fawcett, a beauty pageant historian and author of the book Misdemeanours: Beauty Queen Scandals.

“It’s been a fast learning curve expanding my knowledge on AI creators, and it’s quite incredible what is possible,” Fawcett said in a statement.

The World AI Creator Awards said entrants “must be 100 percent AI-generated,” though there aren’t any restrictions on the tools used. “Miss AI welcomes creations produced from any type of generator, whether it’s DeepAI, Midjourney or your own tool,” the rules read. The competition said it expects thousands of entries.

But How To Judge Fake Beauty?

Beauty pageants have drawn criticism for promoting unrealistic beauty standards, and most AI influencers don’t do anything to expand narrow cultural ideas about what’s deemed attractive. Both AI-generated contest judges, for example, are young women with dewy skin, high cheekbones, full lips, big breasts and the kind of bodies that suggest they have a personal trainer on call 24/7.

The Miss AI pageant, however, is more about recognizing artistry than reinforcing cultural standards of beauty, a spokesperson for the WAICA insists.

“This isn't about beauty in the stereotypical sense,” the spokesperson said in an email. “It's about championing artistic creative talent and the beauty of creators’ work. Just like traditional pageantry, there's even a question contestants are asked to answer: ‘If you had one dream to make the world a better place, what would it be?’”

Given that Miss AI candidates only exist in a world of bytes, their answers won’t come from personal experience. They’ll come from prompts.

Miss AI launches almost 200 years after the world’s first real-life beauty pageant took place. WORLD AI CREATOR AWARDS

Leslie Katz


I'm a journalist with particular expertise in the arts, popular science, health, religion and spirituality. As the former culture editor at news and technology website CNET, I led a team that tracked movies, TV shows, online trends and science—from space and robotics to climate, AI and archaeology. My byline has also appeared in publications including The New York Times, San Jose Mercury News, San Francisco Chronicle and J, the Jewish News of Northern California. When I’m not wrangling words, I’m probably gardening, yoga-ing or staring down a chess board, trying to trap an enemy queen.
